Concepts of Caching: Introduction to Caching

Introduction

Caching is a concept that often goes unnoticed but plays a crucial role in optimizing system performance. Whether it’s speeding up network calls, reducing disk I/O, or minimizing computational overhead, caching is the unsung hero that makes these operations more efficient. In this blog, we’ll cover the following:

What is Caching?

Basic Caching Flow

Key Points in Caching

Caching at Different Levels

Considerations When Using a Cache

What is Caching?

A cache is anything that helps you avoid expensive network I/O, disk I/O, or computation.

Example: an API call that fetches profile information on Instagram or another social media platform. By storing this data temporarily in a cache, we can significantly reduce the time it takes to load a user’s profile and hence reduce latency.

At its core, caching is all about storing a subset of data in a “closer” and faster storage medium, so you don’t have to fetch it from the main database every time. It’s like having a personal chef who already knows your favorite meals. Instead of going through the entire menu each time, the chef has your go-to dishes prepped and ready to serve, cutting down your wait time significantly.

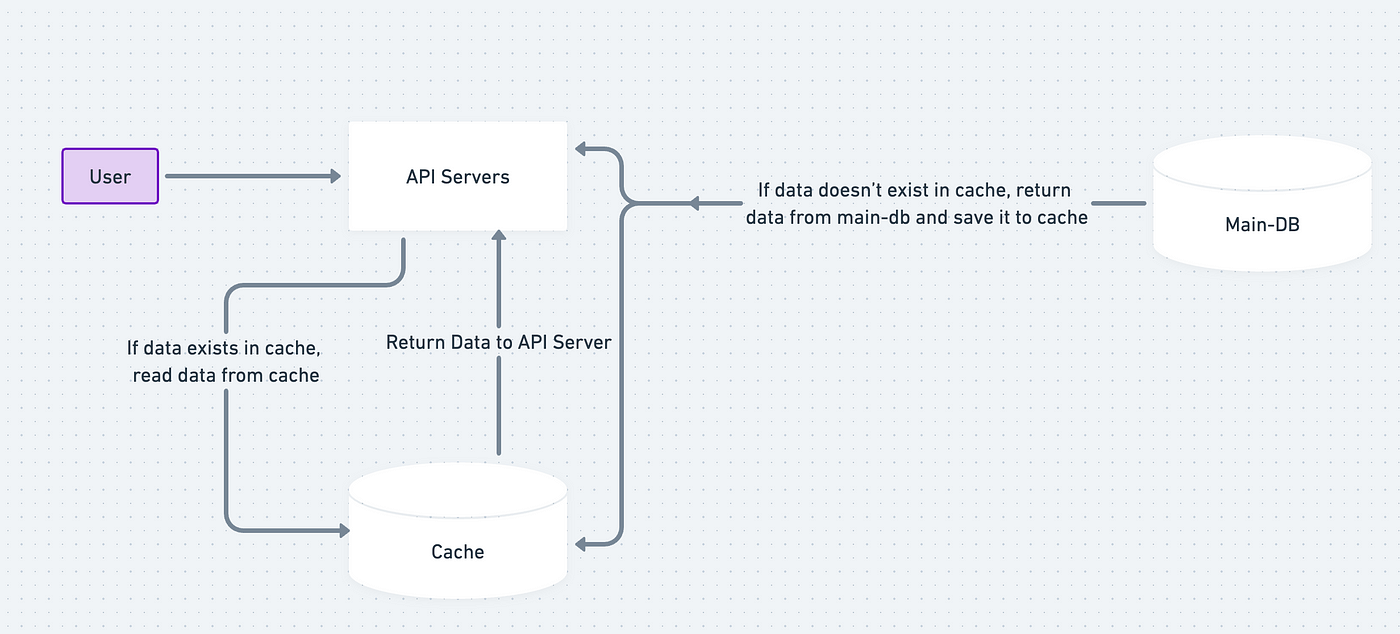

Basic Caching Flow:

User sends a request for data.

The request goes to the cache first. Two outcomes are possible:

Cache Hit: Cache returns the data to the User.

Cache Miss: The request is forwarded to the main database, which returns the data to both the cache and the user.

Cache stores the data for future requests.

Key Points:

Caching helps avoid expensive network calls, disk I/O, or computations.

Not all data is cached — only the subset most likely to be accessed.

Commonly used caching solutions include Redis and Memcached.

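Caching at Different Levels

Browser Caching: Your Personal Assistant

The browser can cache static assets such as images, stylesheets, and scripts. It also stores cookies locally, so they don’t have to be issued again on every visit.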

Benefits: Reduces latency and saves bandwidth, enhancing user experience.
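Browsers decide how long to reuse a stored response mostly from the `Cache-Control` response header that the server sends. The sketch below shows one way a server might choose that header per file. The extension list, the one-day lifetime, and the function name `cache_control_for` are assumptions made for illustration, not recommendations.

```python
from pathlib import PurePosixPath

# Assumed policy for this sketch: which file types count as static assets,
# and how long (in seconds) a browser may reuse them.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".svg", ".woff2"}
ONE_DAY = 24 * 60 * 60


def cache_control_for(path):
    """Pick a Cache-Control header value for the file at `path`."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_EXTENSIONS:
        # The browser may reuse its stored copy for a day without asking again.
        return f"public, max-age={ONE_DAY}"
    # Everything else must be revalidated with the server before reuse.
    return "no-cache"


logo_header = cache_control_for("/static/logo.png")
page_header = cache_control_for("/index.html")
```

Here `logo_header` allows reuse for 86400 seconds, while `page_header` tells the browser to check with the server before reusing its copy.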

CDN: The Local Grocery Store of the Internet

We can cache static assets like images and videos.

Benefits: Keeps data closer to the user, reducing long network delays.

Remote Centralized Cache: The Communal Toolbox

Data from the database can be cached in a centralized store such as Redis or Memcached, which is shared by all application servers.

Benefits: Saves you from making repeated trips to the database.
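As a rough sketch of how application code might use a shared cache, the function below takes any client object that offers `get(key)` and `setex(key, seconds, value)`, which are the shapes of those two calls on a redis-py client. The key scheme `profile:<id>`, the 300-second lifetime, and the `load_profile_from_db` callback are hypothetical names chosen for the example.

```python
import json

PROFILE_TTL_SECONDS = 300  # assumed freshness window for this sketch


def get_profile(cache_client, user_id, load_profile_from_db):
    """Return a user's profile, using a shared cache in front of the database.

    `cache_client` is expected to behave like a redis-py client: `get(key)`
    returns the stored value or None, and `setex(key, seconds, value)` stores
    a value that expires after `seconds`.
    """
    key = f"profile:{user_id}"                 # hypothetical key naming scheme
    cached = cache_client.get(key)
    if cached is not None:
        return json.loads(cached)              # hit: no trip to the database
    profile = load_profile_from_db(user_id)    # miss: one trip to the database
    cache_client.setex(key, PROFILE_TTL_SECONDS, json.dumps(profile))
    return profile
```

With redis-py installed and a Redis server reachable, `cache_client` could be created with `redis.Redis(host="localhost", port=6379)`. Because every application server talks to the same cache, a profile loaded by one server is a hit for all the others until it expires.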

Considerations while Caching

When to Use Cache

Consider caching when data is read frequently but modified infrequently. Remember, cache servers are not ideal for persisting important data: they typically keep data in memory, so entries can be evicted or lost on a restart.
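To see why read-heavy data benefits most, it helps to estimate the average read latency for a given hit ratio. The sketch below uses a deliberately simple model in which a hit costs only the cache lookup and a miss costs only the database lookup. The 1 ms and 50 ms figures are illustrative assumptions, not measurements.

```python
def average_read_latency_ms(hit_ratio, cache_latency_ms, db_latency_ms):
    """Expected latency of one read under a simple two-tier model.

    Simplification: a hit costs only the cache lookup, and a miss costs
    only the database lookup.
    """
    return hit_ratio * cache_latency_ms + (1 - hit_ratio) * db_latency_ms


# Illustrative numbers only, not measurements.
CACHE_MS = 1.0
DB_MS = 50.0

for hit_ratio in (0.0, 0.5, 0.9, 0.99):
    latency = average_read_latency_ms(hit_ratio, CACHE_MS, DB_MS)
    print(f"hit ratio {hit_ratio:.2f}: about {latency:.1f} ms per read")
```

Data that changes rarely stays valid in the cache for longer, which tends to push the hit ratio up and the average latency down.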

Expiration and Eviction Policies

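Implement an expiration policy to remove stale data. For cache eviction, which decides what to drop when the cache is full, popular policies include LRU (least recently used), LFU (least frequently used), and FIFO (first in, first out).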
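Here is a minimal sketch that combines both ideas: a time-to-live for expiration and LRU for eviction. The class name `LRUCacheWithTTL` and its parameters are invented for this example, and real cache servers implement these policies in more sophisticated ways.

```python
import time
from collections import OrderedDict


class LRUCacheWithTTL:
    """A small cache with LRU eviction and per-entry expiration."""

    def __init__(self, capacity, ttl_seconds, clock=time.monotonic):
        self.capacity = capacity
        self.ttl_seconds = ttl_seconds
        self.clock = clock              # injectable so expiry can be tested
        self.entries = OrderedDict()    # key -> (value, expires_at)

    def get(self, key):
        item = self.entries.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self.clock() >= expires_at:  # expiration: the entry is stale
            del self.entries[key]
            return None
        self.entries.move_to_end(key)   # mark as most recently used
        return value

    def put(self, key, value):
        self.entries[key] = (value, self.clock() + self.ttl_seconds)
        self.entries.move_to_end(key)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # eviction: drop least recently used


lru = LRUCacheWithTTL(capacity=2, ttl_seconds=60)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")       # "a" is now the most recently used entry
lru.put("c", 3)    # the cache is full, so "b" is evicted
```

Expiration answers "is this entry still fresh?", while eviction answers "what do we drop when we run out of room?". The two policies work independently of each other.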

Consistency Matters

Keeping your data store and cache in sync is crucial. This becomes even more challenging when scaling across multiple regions.
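One common way to keep the two in sync is to remove the cached copy whenever the underlying data changes, so the next read reloads it. The sketch below shows that idea in a single process, with dictionaries standing in for the data store and the cache. It does not address concurrent writers or multi-region replication, which is where the hard problems are.

```python
# Hypothetical stand-ins: one dict for the data store, one for the cache.
data_store = {"user:1": "Alice"}
cache = {}


def read(key):
    """Cache-aside read: fill the cache on a miss."""
    if key not in cache:
        cache[key] = data_store[key]
    return cache[key]


def write(key, value):
    """Update the data store first, then drop the cached copy."""
    data_store[key] = value
    cache.pop(key, None)   # the next read reloads the fresh value


stale_candidate = read("user:1")   # caches "Alice"
write("user:1", "Alicia")          # store updated, cached entry removed
fresh = read("user:1")             # reloaded from the data store
```

Without the `cache.pop` call in `write`, the last read would still return the old name.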

Mitigating Failures

Avoid a single point of failure by using multiple cache servers across different data centers. Overprovisioning memory is also recommended, so the cache has headroom as usage grows.
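On the client side, tolerating a failed cache server can be as simple as trying the next one and falling back to the database if none can answer. The sketch below is one hedged illustration of that idea. `CacheUnavailable`, `DictNode`, and `get_with_fallback` are hypothetical names, and real deployments usually rely on replication or client libraries that handle failover for you.

```python
class CacheUnavailable(Exception):
    """Raised by a cache node that cannot be reached (hypothetical)."""


class DictNode:
    """Toy cache node backed by a dict, with a switch to simulate an outage."""

    def __init__(self, data, healthy=True):
        self.data = data
        self.healthy = healthy

    def get(self, key):
        if not self.healthy:
            raise CacheUnavailable("node is down")
        return self.data.get(key)


def get_with_fallback(key, cache_nodes, load_from_db):
    """Try each cache node in turn; use the database if all fail or miss."""
    for node in cache_nodes:
        try:
            value = node.get(key)
        except CacheUnavailable:
            continue             # this node is down, try the next one
        if value is not None:
            return value         # served by a healthy node
    return load_from_db(key)     # no node could answer


nodes = [
    DictNode({"user:1": "Alice"}, healthy=False),  # simulated outage
    DictNode({"user:1": "Alice"}),                 # healthy replica
]
value = get_with_fallback("user:1", nodes, load_from_db=lambda key: "from-db")
```

In this run the first node raises `CacheUnavailable`, the second node answers, and the database is never contacted.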

Real-World Examples

Social Media Feeds: Your social media home feed is a prime example of caching. The posts you see are pre-fetched and stored temporarily so that you don’t have to wait for them to load each time you open the app.

E-commerce Websites: Product recommendations and recently viewed items are often cached to provide a faster and more personalized shopping experience.

Conclusion

Understanding caching is vital for anyone looking to optimize system performance. From API calls to live streams, caching is the backbone that allows for quicker data retrieval and a better user experience.